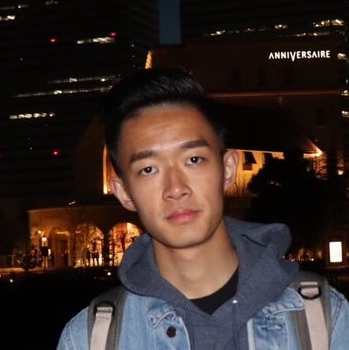

About Me

I am a third-year Ph.D. student in the UCI-NLP group at the University of California, Irvine, where I am fortunate to be advised by Sameer Singh. My current research interests center on (1) natural language reasoning and (2) understanding and evaluating NLP systems.

Previously, I received my M.S. in Computer Science from ETH Zurich, advised by Mrinmaya Sachan. Before that, I obtained a B.S. in Theoretical and Applied Mechanics from Peking University, where I worked with Yizhou Wang. Before joining UCI, I also spent a wonderful half year as a research intern at EPFL, working with Antoine Bosselut.

Email: [firstname].fei@uci.edu

Recent News

- 2025 - Our Nudging paper was selected for an oral and panel presentation at ACL 2025 (top 0.8%, 25/3000+)!

- 2025 - Started an internship @ Amazon Rufus.

- 2025 - One paper accepted to ACL 2025

- 2024 - Check out our new preprint on inference-time alignment! [website]

- 2023 - Paper accepted to EMNLP 2023: “Towards a Mechanistic Interpretation of Multi-Step Reasoning Capabilities of Language Models”! [pdf] [code]

- 2023 - Joined the UCI-NLP group.

- 2023 - Paper accepted to ACL 2023: “Mitigating Label Biases for In-context Learning” [pdf] [code]

- 2022 - Paper accepted to EMNLP 2022: “Beyond prompting: Making pre-trained language models better zero-shot learners by clustering representations” [pdf] [code]

- 2022 - Started a research internship at EPFL, supervised by Antoine Bosselut.

- 2022 - Graduated with an M.S. in Computer Science with distinction from ETH Zurich.

Publications and Preprints

MoCo: A One-Stop Shop for Model Collaboration Research

Shangbin Feng, Yuyang Bai, Ziyuan Yang, Yike Wang, Zhaoxuan Tan, Jiajie Yan, Zhenyu Lei, Wenxuan Ding, Weijia Shi, Haojin Wang, Zhenting Qi, Yuru Jiang, Heng Wang, Chengsong Huang, Yu Fei, Jihan Yao, Yilun Du, Luke Zettlemoyer, Yejin Choi, Yulia Tsvetkov

Under Review

[paper] [code]

Nudging: Inference-time Alignment of LLMs via Guided Decoding

Yu Fei, Yasaman Razeghi, Sameer Singh

ACL 2025 (🚀 Oral and panel presentation, top 0.8%, 25/3000+)

[paper] [code] [website] [demo] [video] [slides]

Towards a Mechanistic Interpretation of Multi-Step Reasoning Capabilities of Language Models

Yifan Hou, Jiaoda Li, Yu Fei, Alessandro Stolfo, Wangchunshu Zhou, Guangtao Zeng, Antoine Bosselut, Mrinmaya Sachan

EMNLP 2023

[paper] [code]

Mitigating Label Biases for In-context Learning

Yu Fei, Yifan Hou, Zeming Chen, Antoine Bosselut

ACL 2023

[paper] [code]

Beyond prompting: Making pre-trained language models better zero-shot learners by clustering representations

Yu Fei, Ping Nie, Zhao Meng, Roger Wattenhofer, Mrinmaya Sachan

EMNLP 2022

[paper] [code]

Align, attend and locate: Chest x-ray diagnosis via contrast induced attention network with limited supervision

Jingyu Liu, Gangming Zhao, Yu Fei, Ming Zhang, Yizhou Wang, Yizhou Yu

ICCV 2019

[paper]